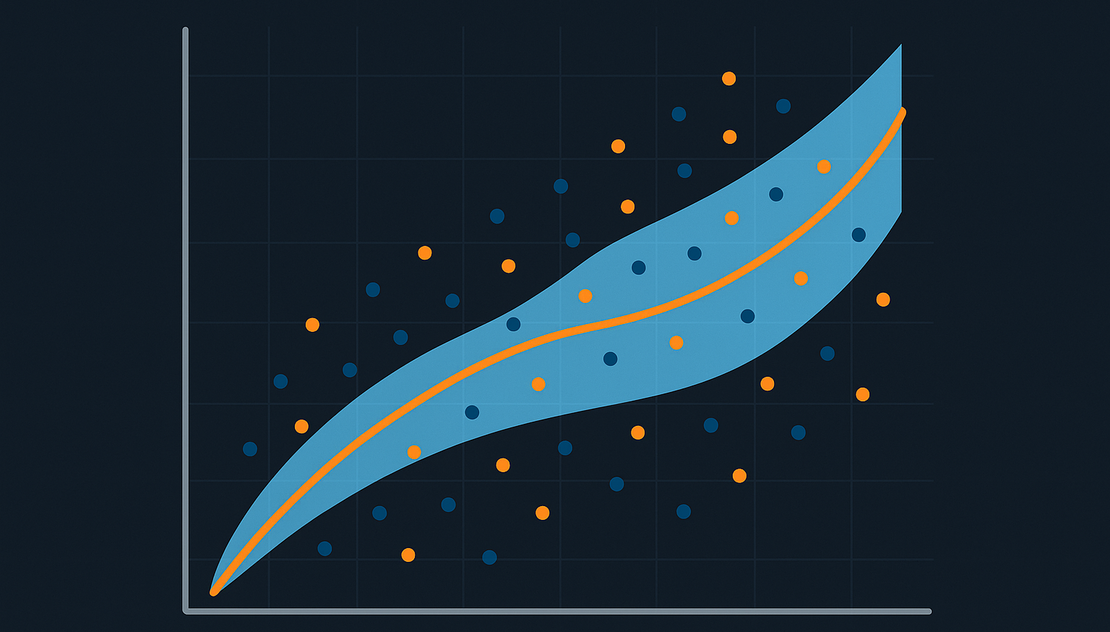

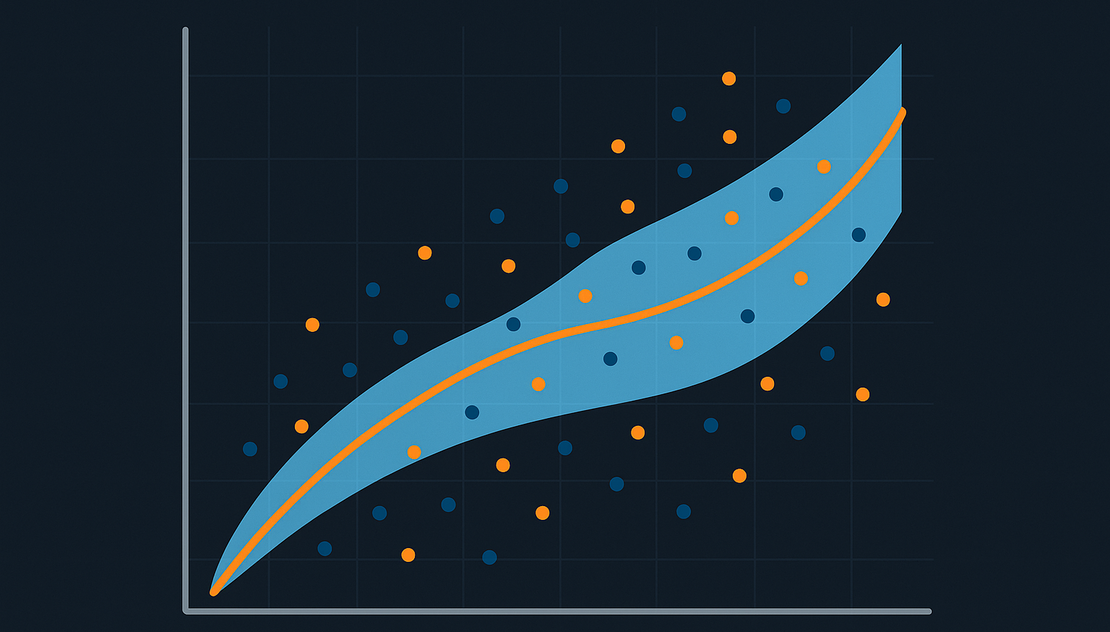


Behind every digital application lie data infrastructures that collect, transform, and make information available. Modern architectures, from data lakehouses to ELT pipelines, open new opportunities but require attention to costs and complexity. Proportionality, efficiency, and sustainability become guiding principles for systems that are truly useful and long-lasting.

When we talk about digital transformation, attention tends to focus on “spectacular” technologies: artificial intelligence, predictive algorithms, automation tools. However, behind these innovations lies a less visible but absolutely crucial discipline: data engineering.

A good analogy is the power grid or the internet: no one looks at them directly, but we immediately notice when they stop working. In the same way, without data engineering we would not have advanced analytics or data-driven artificial intelligence systems.

According to IBM, data engineering is the practice of designing and building systems for the aggregation, storage, and analysis of large volumes of data. This work underpins both decision-making processes and the training of machine learning models.

The main activities include:

For a long time, the reference model was ETL (Extract-Transform-Load), built around centralized architectures such as data warehouses, where data was loaded only after being cleaned, aggregated, structured, and optimized. This approach ensured power and speed, but it also introduced rigidity and inefficiencies: data duplication, repeated and unnecessary calculations, and management overhead.

In recent years, the scenario has changed. Today, the preferred model is ELT (Extract-Load-Transform): data is extracted and loaded straight into its destination store in raw form, then transformed only when needed.

This evolution is directly linked to the transition from data warehouse to data lakehouse:

📌 Note: there are also disadvantages. The greater flexibility of lakehouses can lead to higher management complexity, unexpected costs for orchestrating the various layers, and the need for advanced technical skills.
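To make the ELT idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for the destination store. The table, view, and sample rows (`raw_orders`, `revenue_by_region`) are hypothetical and chosen only for illustration; a real lakehouse would use different storage and a different query engine.

```python
import sqlite3

# Hypothetical raw records, as they might arrive from a source system.
raw_rows = [
    ("2025-01-03", "north", "120.50"),
    ("2025-01-03", "south", "80.00"),
    ("2025-01-04", "north", "not_a_number"),  # dirty row, loaded as-is
    ("2025-01-04", "south", "45.25"),
]

conn = sqlite3.connect(":memory:")

# Extract + Load: store the data untouched, every column as text.
conn.execute("CREATE TABLE raw_orders (order_date TEXT, region TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform: declared as a view, so the cleaning and aggregation
# are computed only when someone actually queries the result.
conn.execute("""
    CREATE VIEW revenue_by_region AS
    SELECT region, SUM(CAST(amount AS REAL)) AS revenue
    FROM raw_orders
    WHERE amount GLOB '[0-9]*.[0-9]*'  -- keep only values shaped like decimals
    GROUP BY region
""")

revenue = dict(conn.execute(
    "SELECT region, revenue FROM revenue_by_region ORDER BY region"
))
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(revenue, raw_count)
```

The raw table keeps all four rows, including the dirty one, while the cleaning rule lives in the view. In an ETL flow the same rule would run before loading, and the rejected row would never reach the store.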

Behind every “cloud,” where data is hosted and managed, there is a physical infrastructure: data centers. These facilities have also become a focus of attention due to their environmental impact.

The Environment, Social and Governance Report 2025 by Structure Research highlights significant data:

This increase in consumption and emissions has driven the search for countermeasures: emerging solutions such as closed-loop cooling systems and the recovery of data center heat for urban heating are becoming integral parts of the sector’s sustainability strategies.

➡️ In summary, the data show steady growth in consumption and emissions, only partially mitigated by advances in efficiency and sustainability of data centers.

Another myth that needs cutting down to size concerns Big Data.

According to an analysis of data published by Snowflake and Redshift:

👉 This means that “Big Data” concerns a minority of users. In everyday practice, most organizations work with much smaller volumes. It is therefore no surprise that there is increasing talk of Small Data: datasets that are smaller, but more targeted and meaningful.
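One way to ground the Big Data versus Small Data question is simply to measure the dataset before choosing an architecture. The sketch below is a rough rule of thumb, not an established standard: the function names and the thresholds (half of RAM, ten times RAM) are assumptions made for illustration.

```python
from pathlib import Path

GIB = 1024 ** 3


def dataset_size_bytes(root: str) -> int:
    """Sum the sizes of all regular files under `root`."""
    return sum(p.stat().st_size for p in Path(root).rglob("*") if p.is_file())


def sizing_hint(size_bytes: int, ram_bytes: int, headroom: float = 0.5) -> str:
    """Return a coarse suggestion based on data size relative to RAM.

    The thresholds are hypothetical: `headroom` is the fraction of RAM
    we are willing to fill, and 10x RAM is an arbitrary cut-off.
    """
    if size_bytes <= ram_bytes * headroom:
        return "single machine, in memory"
    if size_bytes <= ram_bytes * 10:
        return "single machine, chunked or out-of-core"
    return "consider distributed processing"


# Example with made-up sizes on a machine with 16 GiB of RAM.
for size in (2 * GIB, 50 * GIB, 500 * GIB):
    print(size // GIB, "GiB ->", sizing_hint(size, ram_bytes=16 * GIB))
```

In practice `dataset_size_bytes` would be pointed at the actual data directory, and the thresholds tuned to the workload, since compressed files can expand considerably once loaded.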

From the analysis, some guiding principles emerge for the future of data engineering:

Ultimately, data engineering should no longer be seen only as a set of technical tools, but as a discipline that combines engineering precision, environmental sustainability, and economic efficiency.

🔍 Do we really need a “Big Data” infrastructure, or are our datasets actually “Small”?

⚖️ Is the chosen architecture proportionate to the problem, or are we at risk of oversizing and unnecessary costs?

🌱 Have the environmental implications (energy consumption, water use, emissions) been considered?

⚡ Are the pipelines optimized to reduce computational and memory waste?

🛠️ Is the solution agile enough to adapt to future changes in scale?

💰 Have we estimated the overhead costs (e.g., services running 24/7 unnecessarily)?

👉 Answering these questions leads not only to more sustainable and higher-performing systems, but also to lower costs and reduced strategic risk.
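The overhead question in the checklist above can be approached with simple arithmetic. The following sketch compares a service left running 24/7 with one that runs only while it is needed. The hourly rate, usage hours, and function name are invented for illustration and do not reflect any provider's pricing.

```python
def idle_overhead(hourly_rate: float, hours_used_per_day: float, days: int = 30):
    """Compare always-on cost with cost for the hours actually used.

    Returns (always_on_cost, needed_cost, wasted_cost, wasted_fraction).
    All inputs are hypothetical estimates supplied by the caller.
    """
    always_on_cost = hourly_rate * 24 * days
    needed_cost = hourly_rate * hours_used_per_day * days
    wasted_cost = always_on_cost - needed_cost
    wasted_fraction = wasted_cost / always_on_cost if always_on_cost else 0.0
    return always_on_cost, needed_cost, wasted_cost, wasted_fraction


# Made-up example: a service billed at 0.50 per hour, used 6 hours a day.
always_on, needed, wasted, fraction = idle_overhead(0.50, 6)
print(f"always on: {always_on:.2f}, needed: {needed:.2f}, "
      f"wasted: {wasted:.2f} ({fraction:.0%})")
```

With these assumed numbers, three quarters of the monthly bill pays for idle time, which is the kind of overhead that scheduling or scale-to-zero setups aim to remove.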
